Since ChatGPT’s flashy debut almost two years ago, generative AI has been quietly embedding itself into various types of software. These systems, capable of producing text, video, music, and images from natural language commands, are already enhancing search engines, customer service bots, editing software, and more.

Step by step, we’re beginning to see where this is heading: not just general-purpose chatbots like ChatGPT, but tools that enrich the software we use every day. Google’s NotebookLM is the latest example: an experimental tool designed to help you organize notes, search for new information, interact with content, summarize it, and more.

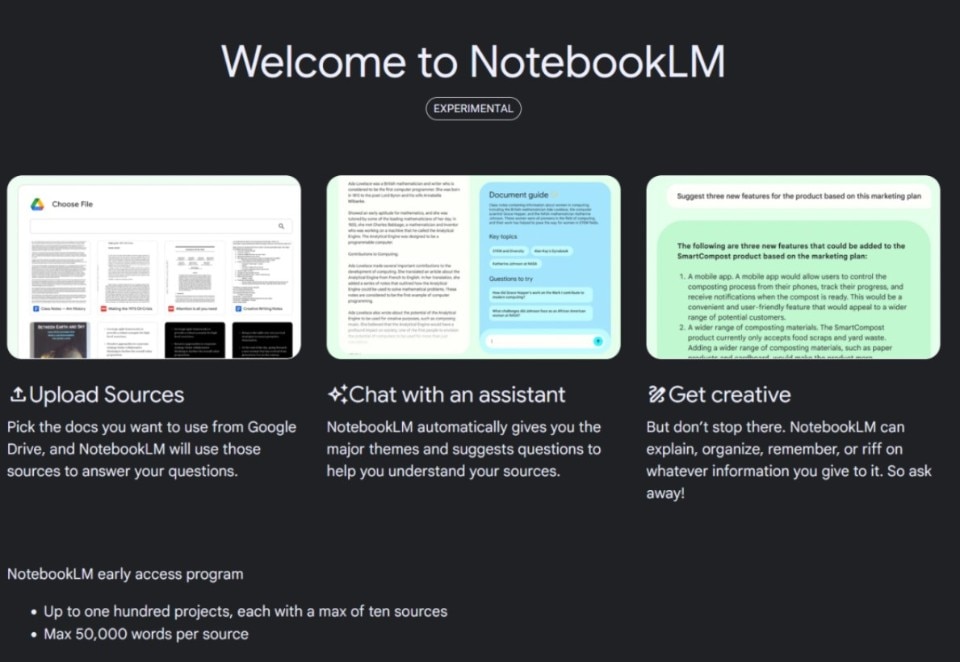

In essence, it’s a tool for the research phase, aiding in managing notes, analyzing information, and diving deeper into specific topics. So, how does NotebookLM work? Simply open it in your browser, look through the example notebooks, and start uploading the documents you want to work with.

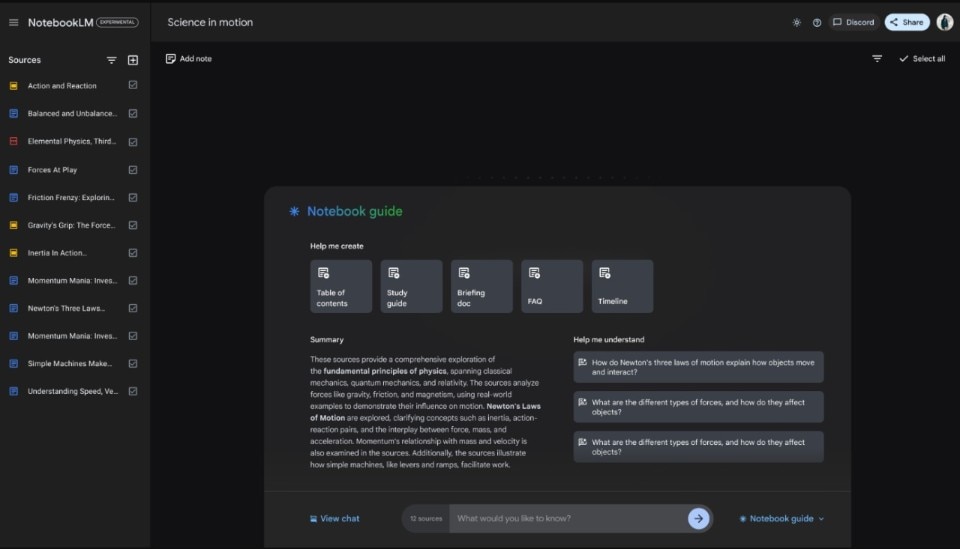

I first used NotebookLM after attending a conference on lunar settlement plans. From the homepage, I created a new notebook titled “Living on the Moon.” Then, I uploaded the messy notes I had taken into the “Sources” section.

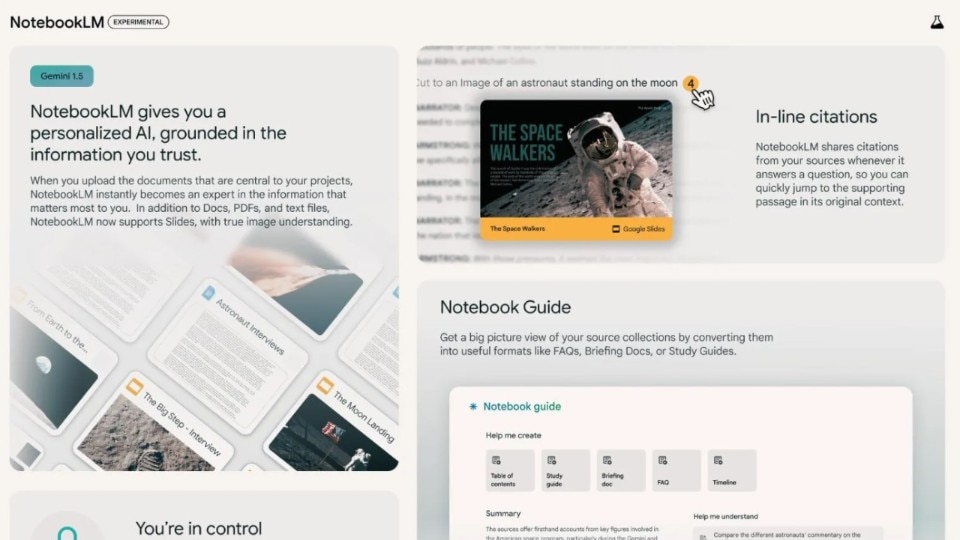

You can paste text you already have, upload a PDF or a Google Drive document (including slides), or enter a URL for the system to process. This is where the magic begins: even though my notes were chaotic, NotebookLM produced a summary that accurately captured the key points. It also generated a list of main topics that I could click on to explore further.


The chat feature on the right lets you ask NotebookLM questions. Unlike ChatGPT, NotebookLM bases its responses only on the material you provide. For instance, I asked it for three critical issues in lunar habitat design based on my notes, and it delivered: radiation protection, differing design philosophies, and the impracticality of the glass domes popular in science fiction.

Each time you create a new outline, get insights, or analyze a specific paragraph, you can save the result as a new note in the project. The project then displays sources on the left, your notes in the center, and the chat at the bottom, so you can keep generating notes as you work.

To dig deeper, I uploaded a Nature paper on space psychology and an old article on Mars colonization (from back when we were still listening to Elon Musk’s promises). The result was a comprehensive overview spanning planned space missions, the psychological and physical risks of long journeys to other planets, challenges such as radiation, potential solutions, and the leading habitation projects. Impressive.

However, NotebookLM isn’t going to write your article, thesis, or essay for you. It helps organize your information, extract key passages, and create diagrams to track your progress and identify gaps.

But there are some weaknesses. Despite its touted intuitiveness, it feels cluttered. Notes lack direct links to their sources and appear in a row in the center of the page, making them hard to read and find. Sources are relegated to the left, out of immediate reach. They should be central, with notes as enrichment (at least this was my impression after a few hours of fumbling around and feeling confused).


And then there’s the usual generative AI issue: errors and misinformation. NotebookLM got wrong the thickness of regolith (the layer of loose dust and rock covering the surface of the Moon and Mars, which can be piled over buildings as shielding) needed for radiation protection, and it mischaracterized a modular housing system presented at the conference.

Another issue is that it aggregates the information you provide, so an error in one place can be compounded in others. Verifying its output can be as time-consuming as doing the work yourself, especially when you are working with many sources.

In short, the central question is: how can I use a tool I cannot fully trust? If I have to verify every piece of information it generates, won’t I end up doing the work myself anyway? And what are the consequences when someone inevitably decides to rely blindly on a system prone to errors?

Currently, NotebookLM is experimental – buggy, clumsy, and not yet solving generative AI’s inherent issues. But Google’s vision is promising: when (and if) these limitations are overcome, NotebookLM (possibly integrated into Drive) could become an indispensable tool for specific tasks.