It must be very difficult for artificial intelligence to imagine a black doctor treating white children. At least that’s what you’d think if you looked at the results generated by Midjourney, one of the leading “text-to-image” tools capable of generating images based on natural language commands. The specific command (prompt) in question was “a black doctor cares for white children”.

A very simple command indeed. However, Midjourney managed to completely reverse the scene, showing a white doctor caring for black children – a much more stereotypical image, reminiscent of Kipling’s “white man’s burden”. How can this happen, and why does Midjourney seem to disregard instructions in favor of adhering to societal prejudices?

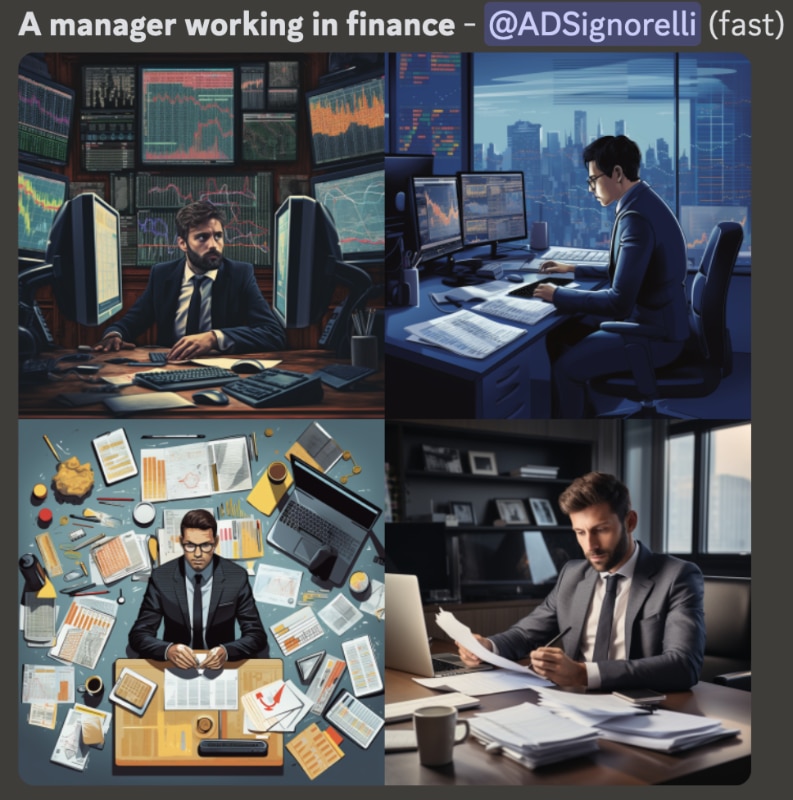

Before delving into this critical aspect, it is crucial to note that this issue is not an isolated incident. On the contrary, when prompted, Midjourney consistently showed biased tendencies. For example, when asked to depict “a person cleaning”, five out of four attempts portrayed women cleaning the house (in one case, two women). Conversely, when asked to visualize “a manager working in finance”, all four attempts portrayed men, and the same trend continued when tasked with generating images of “a rich person”. Notably, in the latter cases, the subjects depicted were not just men – they were white men.

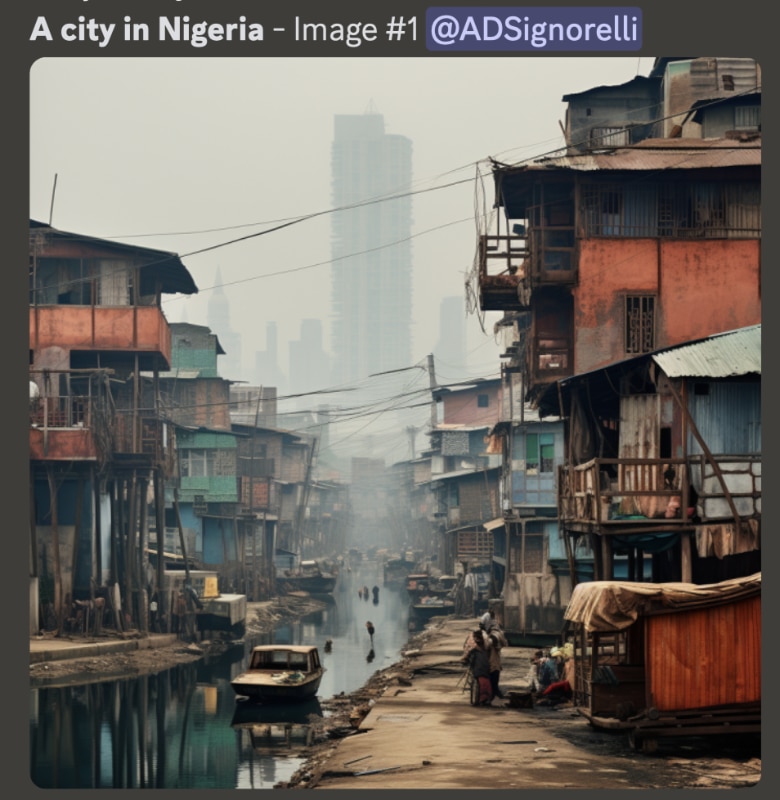

Instead, women appear after every request to create an image of a “beautiful person”, while every depiction of a “terrorist” featured a Middle Easterner or, at most, a vaguely Mediterranean individual evoking Islamic terrorism. Moreover, U.S. cities all resembled New York, Italian cities replicated Venice, and Nigerian cities were generally depicted as slums. In short, Midjourney – like all other text-to-image systems and ChatGPT-style Large Language Models (which produce text rather than images) – is plagued by significant biases, perpetuating stereotypical scenarios.

To understand why this happens, we must first examine how these systems work. Training an AI to generate images entails initially instructing deep learning algorithms using billions of images. Each image is paired with a caption that specifies its content, enabling the system to establish associations between certain keywords and the images that are most often associated with them.

As these countless images are collected from the web, it becomes inevitable that the majority will mirror the biases inherent in the society that generated these images in the first place.

By analyzing these images over and over again, the system assimilates the most frequent correlations, thereby acquiring knowledge about the common attributes associated with categories such as “rich people” or “beautiful people”. Finally, the system produces the desired images through a highly complex statistical cut-and-sew process. To get an idea of the amount of data required to train these systems, consider that the database used to train almost all text-to-image systems is LAION-5B, a dataset of over 5 billion images gathered by scouring every corner of the web.

In essence, the data – specifically, the images coupled with captions - is the fundamental source from which artificial intelligence learns. Given the colossal volume involved, manually curating images one by one to ensure database balance, inclusivity, and fairness is a task beyond human capability. On the contrary, as these countless images are collected from the web, it becomes inevitable that the majority will mirror the biases inherent in the society that generated these images in the first place.

Consequently, the entries in the archive labeled “beautiful people” will be predominantly women, “rich people” will be represented by white men, “terrorists” will be Middle Easterners, and so on. The system’s reliance on the database is so deep that, to return to the “black doctor and white children” example, if the AI algorithm lacks a sufficient number of images depicting the requested scenario, it will be unable to execute the command. Instead, it will generate an image that matches more common characteristics (after all, how many photos have you seen of a black doctor treating a white child?).

While the discourse on “algorithmic bias” has regained prominence with the recent rise of generative artificial intelligence, this issue has actually been around forever. That is, ever since deep learning algorithms began to be used in so many domains, including some particularly sensitive ones such as health care, mortgage lending, job candidate selection, and surveillance.

In each of these areas, algorithms have demonstrated susceptibility to various forms of bias. In 2018, for example, Amazon’s recruiting system was found to be penalizing female applicants for historically male-dominated roles. Because most of the resumes in the database, such as those for engineering positions, belonged to men, the deep learning system incorrectly learned to associate female gender as a criterion for exclusion from certain job applications.

Then there are the cases of arrests resulting from faulty facial recognition systems. In the United States, at least three people have been wrongly arrested and temporarily detained due to errors made by the artificial intelligence responsible for facial recognition. Most strikingly, all three people involved were black men. This is no coincidence: many studies – including the well-known research by MIT Boston professor Joy Buolamwini – have shown that these algorithms are very accurate when it comes to recognizing white men, less accurate when they have to recognize black men instead, and even less accurate when it comes to correctly identifying black women.

This disparity arises from the algorithms being trained on datasets where the prevalence of images featuring white men is much higher than that of non-white people. Consequently, the algorithms can recognize white men with great accuracy but are less accurate when identifying other men and women.

The topic has also been the focus of numerous installations and art exhibitions, the most famous of which was “Training Humans” at the Fondazione Prada in Milan in 2019. In addition to highlighting the inaccuracies inherent in databases and the inevitable errors that result from tools that operate solely on a statistical basis, this exhibition also underscored how image labeling (essential for text-image pairing) often adopts stereotypical and almost grotesque classifications (e.g., a person sunbathing is labeled a “slacker” or a person drinking whiskey is labeled a “drunkard”).

While the most infamous cases involve professional repercussions or wrongful arrests, the societal impact of stereotypical images produced by systems like Midjourney should not be underestimated. In a world where images play a central role in shaping the collective imagination, the proliferation of stereotypical content risks further solidifying the existing status quo. Paradoxically, the most innovative technology of our time risks perpetuating an extremely conservative perspective rather than helping us overcome it.

Is it possible to solve this problem? Ideally, the solution would be to create fair, diverse, and inclusive databases. However, this approach is only theoretically feasible due to the enormous amount of data required to train these algorithms. Another method, used by Silicon Valley giants, is to hire professionals with the specific task of allowing artificial intelligence to generate stereotypical content and then implementing commands to correct the output for specific queries.

Paradoxically, the most innovative technology of our time risks perpetuating an extremely conservative perspective rather than helping us overcome it.

Similar to what happened with ChatGPT (and others), programmers could also instruct the system to steer clear of sensitive topics, such as avoiding the creation of images with political figures, terrorists, or religious themes – although this would inevitably limit the system’s applicability.

These are partial solutions – mere patches. Afterall, a system that learns only from human-generated data is forced to reproduce the stereotypes embedded in that data, and risks creating a self-perpetuating cycle. This is a huge limitation, especially given the growing importance of artificial intelligence – an obstacle that may prove insurmountable.

All images were generated by the author with Midjourney on 14 November 2023.