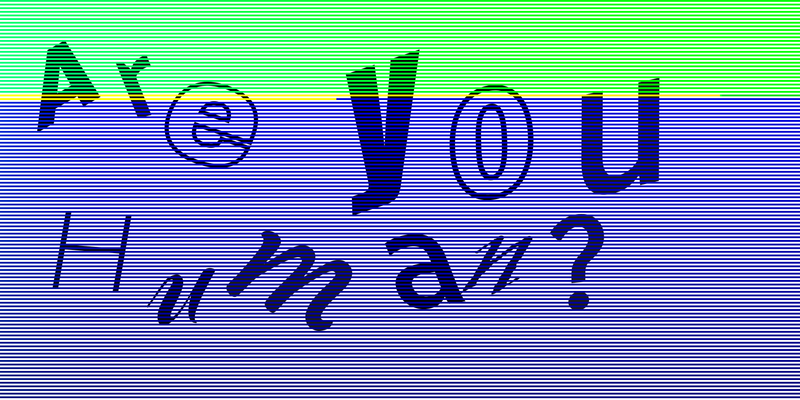

The ubiquitous cameras in our cities and increasingly sophisticated biometric technologies keep us safe, but they can also violate our rights as citizens. These devices collect huge amounts of personal data – often without our consent – and we do not know where it goes or how it is used. The ever-present smartphones, voice assistants, wearable devices, and many other ‘smart’ objects that the Internet of Things (IoT) has brought into our private lives make things easier by connecting everything, but they also track our movements and actions, both online and offline.

The data that these everyday devices extract from us is, in the words of Shoshana Zuboff, the “oil” of a new form of capitalism, which the scholar calls “surveillance capitalism”. In her acclaimed book The Age of Surveillance Capitalism, she explains how new means of production, i.e. artificial intelligence, process this raw material into what feeds a new, rapidly evolving market: predictions of our behavior.

Another key issue is the discriminatory component of data – a problem that stems from the illusion that these devices are objective and free of the biases we instinctively ascribe to humans as opposed to machines. Kate Crawford – artificial intelligence expert and curator of the exhibition ‘Excavating AI: The Politics of Images in Machine Learning Training Sets’ – explains that data are political interventions. They are not as neutral and objective as they seem but are instead organized into datasets that inevitably embody the ideological biases of those who create them. Their management can therefore lead to the proliferation of ‘automated discriminations’ based on race, gender, social status, financial situation, and even lifestyle, taste, and personality.

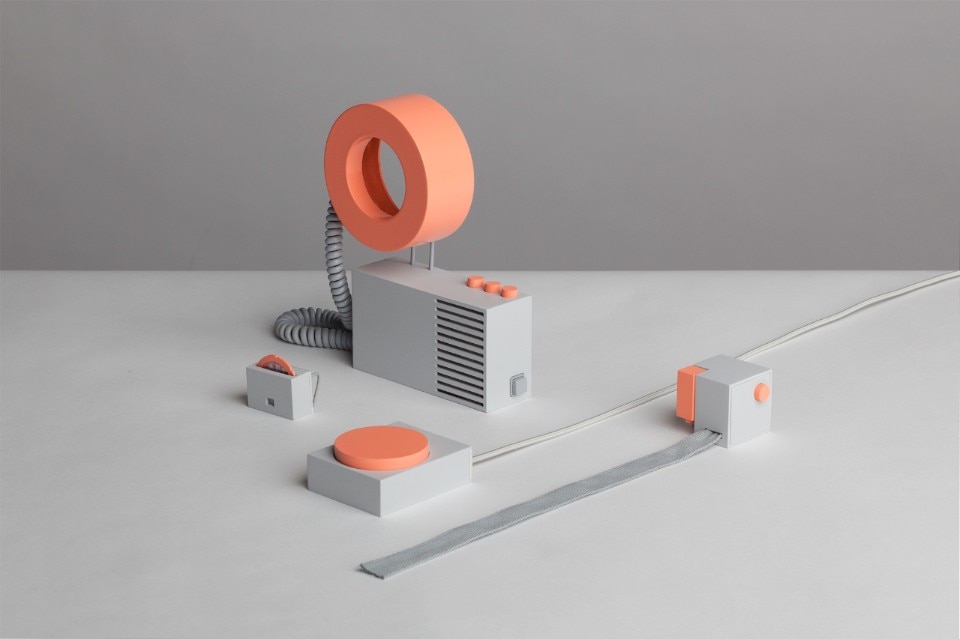

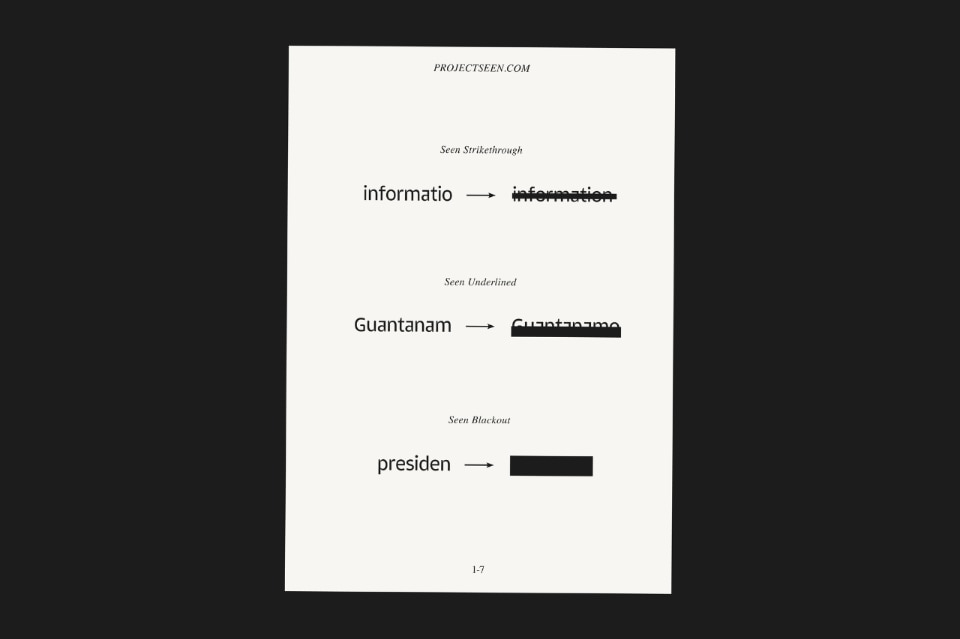

Faced with this complex and ever-changing scenario, design has not sat idly by. In addition to conceiving simple objects such as “privacy shutters” – webcam-blocking devices that are now often built into laptops – design, in its critical dimension, has proposed provocative projects that aim first and foremost to raise awareness of the threat that digital tracking poses to privacy. However, these projects can also serve as marketable objects that users can adopt to escape the control of their devices, thus protecting themselves without retreating into an anachronistic anti-digital sanctuary.

The projects use different methods to protect individual privacy. Some, for example, isolate the user from tracking systems, while others interfere with the various technologies through data overload or falsification.

The gallery presents a selection of projects that focus on two main themes – defense against facial recognition and protection against the surveillance of smart objects – thus addressing both the public and private spheres of today’s citizens.